What the latest AI breakthroughs mean for live and archive content

You’ve probably heard of “Generative Pre-trained Transformers” without realizing it. It’s what the “GPT” stands for in ChatGPT, the hugely hyped chatbot developed by OpenAI, which recently launched GPT-4.

GPT is based on the transformer, a deep learning architecture that can be trained on a huge amount of data very quickly and can, for example, generate text in natural language. Newsbridge has been using similar technology to train some of its multimodal artificial intelligence models for a few years, but not as a chatbot. Our Multimodal AI is specifically trained to hear and read the video content stored in our clients’ media hub for incredibly fast and accurate media asset indexing, archiving and search.

Lack of Video Searchability is a Liability

It’s no exaggeration to say that broadcasters have a chronic video searchability problem. We hear it from media organizations all the time. As much as 50% of a production or programming team’s time can be spent simply trying to find good, relevant content.

Media archive search capabilities are determined by several factors, metadata being a big one. Initially seen as nothing more than a buzzword, metadata has grown in importance in recent years and is now seen as a crucial element of media indexing. “Video without metadata is a liability” is just one memorable line from the panel discussions at last year’s NewsTECHForum in New York.

The state of this metadata can vary greatly. A TV station that embarks on a large archive digitization project without adequately naming its files has no indexing and no metadata to make its archive searchable. Its newly digitized media assets are rendered useless.

Broadcasters give themselves a much stronger base for content discovery if they add tags and written descriptions to all of their media files. However, the addition of tags and descriptions can be in vain if there are limited metadata fields in the MAM (media asset manager), if the MAM search engine fails to ‘talk’ to the metadata, or if there’s no semantic search capability. Without semantic search, if you don’t type the specific keywords linked to your tagged media, you’ll never find the shots you need. Users these days expect a MAM search engine to behave like a plain text web search, but unfortunately this level of search simplicity is often not the reality.
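To make the keyword problem concrete, here is a minimal sketch in Python. It contrasts exact tag matching with a query that is first expanded through a small hand-written synonym table. The table is only a toy stand-in for a real language model, and the clip IDs, tags and function names are all hypothetical; none of this is taken from an actual MAM or from the Newsbridge product.

```python
# Hypothetical archive: clip ID -> tags entered by a media logger.
CLIPS = {
    "clip_001": {"judge", "courtroom", "gavel"},
    "clip_002": {"football", "stadium", "crowd"},
}

# Toy synonym table. A real semantic search would rely on a learned
# language model rather than a hand-written mapping like this one.
SYNONYMS = {
    "trial": {"courtroom", "judge"},
    "soccer": {"football"},
}


def keyword_search(query, clips=CLIPS):
    """Return clips whose tags contain at least one exact query word."""
    terms = set(query.lower().split())
    return sorted(cid for cid, tags in clips.items() if terms & tags)


def expanded_search(query, clips=CLIPS, synonyms=SYNONYMS):
    """Expand each query word with related terms, then match on tags."""
    terms = set(query.lower().split())
    for term in list(terms):
        terms |= synonyms.get(term, set())
    return sorted(cid for cid, tags in clips.items() if terms & tags)


exact_hits = keyword_search("trial")      # no clip is tagged "trial"
expanded_hits = expanded_search("trial")  # finds the courtroom clip
```

With exact matching, a journalist who types “trial” gets nothing back, even though the courtroom footage is sitting in the archive. The expanded query finds it because the search no longer depends on guessing the logger’s exact wording.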

This is where AI — particularly when it leverages the power of natural language models — is a game changer for content discovery.

The New Era of AI For Media Indexing

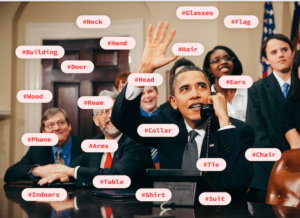

The use of AI for media logging is not new, but AI processing is quite expensive, and the results are often pretty lackluster. Off-the-shelf, unimodal AIs will detect a microphone here, a chair over there. They may detect that there’s a person in the shot wearing a robe, and a wooden gavel on a table. But the AI will not put all these elements together to identify and log that the photo or video sequence shows a judge in a courtroom. Broadcasters using unimodal — or monomodal — AI products are left with a jumble of tags, and a media asset index that is more prone to search bias and false positives.

Traditional AI models often leave broadcasters with a jumble of useless tags.

Even Microsoft’s impressive Bing chatbot, which is built on OpenAI technology, recently lamented its inability to see images and videos during its two-hour conversation with New York Times columnist Kevin Roose.

Newsbridge’s AI is doing just that.

Because our AI is multimodal, it merges detection results from faces, text, objects, patterns (logos) and transcription, and we’ve just added the ability to detect landmarks, monuments, landscapes and cityscapes, including aerial shots.
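As a rough illustration of what merging detection results can look like, the Python sketch below groups detections from several modalities under the shot they overlap in time. It is a simplified assumption about how such a merge might be structured, not a description of Newsbridge’s actual pipeline. The `Detection` class, the `merge_by_shot` function, the timestamps and the shot boundaries are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    modality: str  # e.g. "face", "object", "text", "logo", "landmark"
    label: str     # what the detector reported
    start: float   # start time in seconds
    end: float     # end time in seconds


def merge_by_shot(detections, shot_boundaries):
    """Group labels from every modality under each shot they overlap."""
    merged = defaultdict(lambda: defaultdict(set))
    for shot_id, (shot_start, shot_end) in enumerate(shot_boundaries):
        for det in detections:
            # Two time intervals overlap when each starts before the other ends.
            if det.start < shot_end and det.end > shot_start:
                merged[shot_id][det.modality].add(det.label)
    # Convert to plain dicts with sorted label lists for stable output.
    return {
        shot_id: {mod: sorted(labels) for mod, labels in mods.items()}
        for shot_id, mods in merged.items()
    }


# Hypothetical detector output for a two-shot sequence.
detections = [
    Detection("face", "Prince William", 0.0, 8.0),
    Detection("face", "Catherine Middleton", 1.0, 8.0),
    Detection("landmark", "Westminster Abbey", 0.0, 10.0),
    Detection("object", "robe", 11.0, 16.0),
    Detection("object", "gavel", 12.0, 15.0),
]
shots = [(0.0, 10.0), (10.0, 20.0)]

index = merge_by_shot(detections, shots)
```

Once faces, landmarks and objects sit side by side in one record per shot, a downstream language model has the context it needs to describe the scene as a whole instead of listing isolated tags.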

Newsbridge’s new location-based AI detection and search feature makes finding video clips of landmarks in a media archive lightning fast.

This, coupled with Pre-Trained Transformer technology, means we can train and fine-tune on a huge amount of user data very fast and in parallel, and generate the natural language descriptions and summaries that make a big difference to media production workflows.

Imagine the sheer amount of time saved by an archive department when instead of someone having to watch and manually transcribe, describe and summarize footage, the AI does it in a flash, and provides a description as clear and concise as “HRH Prince William and Catherine Middleton walking out of Westminster Abbey in London, England, on their wedding day.”

The benefits of this next-generation AI technology for broadcasters are profound. At the news desk, journalists and editors no longer have to roll through hours of raw footage, marking in and out. Instead, they find the clips they need to build a story in less than two seconds, simply by searching their archives and live streams in the same intuitive way they search the web. 24/7 Arabic news channel Asharq News is using the Newsbridge AI to index content in both Arabic and English, meaning journalists can search in one language and bring up the footage they need in another.

For producers and programmers looking for cost-effective ways to fill hours of air time, multimodal AI puts their network’s rich history of shows spanning decades right at their fingertips. Fully indexed and searchable scene-by-scene, it’s now quick and easy to bring archive content back to life, and make it live forever.

Our low energy consumption AI model, which maximizes the use of CPUs (central processing units) rather than always using GPUs (graphics processing units), uses seven times less energy than traditional AIs. This means large-scale indexing and archive projects involving thousands of hours of content are no longer just a pipe dream for media managers.

It’s Time to Join The AI Revolution

Video search quality is hugely reliant upon our capacity to index. We know from working with TV channels around the world that the repetitive tasks of media logging, transcribing and summarizing must be very consistent to be effective. This is where the use of AI language models in production workflows is revolutionary.

The current hype around ChatGPT has shed light on AI advancements that began a few years ago and are now accelerating. Rather than fear it, broadcasters should fully embrace the opportunity to harness the power of multimodal AI to finally address their video searchability problems, and uncover the diamonds hiding in their media archive.

Hear more from Frederic Petitpont on the AI revolution of video indexing and search at Programming Everywhere, April 16 in Las Vegas.

For more information about Newsbridge’s groundbreaking multimodal AI technology, cloud Media Hub, Live Asset Manager and Media Marketplace, please visit newsbridge.io and be sure to schedule a demo of their latest innovations at NAB Show, booth W2073.
